Entity Framework Core Optimization Techniques

It is necessary that we Optimize our Entity Framework Core codes so that the application remains light weight and at the same time executes faster. We can perform the optimizing techniques in EF Core by understanding which technique is best to use in a given situation. Let’s discuss some of the most important ones one by one.

Optimizing techniques for Tracking and No-Tracking Queries

By default, queries returning entity types are tracking. Entity Framework Core maintains all the infromation about a Tracking Entity in it’s change tracker. The changes on the tracking entities are saved to the database when SaveChanges method is called.

Let’s see the method which retrives Department table records from the database in the “Department” tracking query.

var dept = await context.Department;Here, first EF Core checks if the Deparment entity is already in it’s context. If it finds it, then the same instance of Department entity is returned. This is fast and takes less memory. If the Department entity isn’t found in the context, then EF Core creates a new Department entity instance and attaches it to the context. In short, EF Core will return same entity instance if it is already in the change tracker.

No-Tracking Queries are those whose information Entity Framework Core does not track in it’s change tracker. They are something like a read only data, and are best to use during read only areas of the app, where information don’t needs to be updated on the database.

By the use of AsNoTracking method we can mark entities as No-Tracking ones.

var dept = await context.Department.AsNoTracking();The default tracking behavior can be changed at the context instance level as shown below:

context.ChangeTracker.QueryTrackingBehavior = QueryTrackingBehavior.NoTracking;

var dept = await context.Department;

Identity Resolution

Identity Resolution means Entity Framework Core tracks a Tracking entity by it’s primary key. If there are multiple instances of an entity with the same primary key then EF Core resolve them to a same instance. Identity Note that Identity Resolution is absent for No-Tracking entities.

We already discussed that EF Core returns the same entity instance if it is already being tracked. But what will happen for a No-Tracking entity?

For a No-Tracking entity EF Core has to return a new instance of the entity each and every time since the entity is not tracked. This can bring slowness to the app when more and more No-Queries entities are called.

However, this is not the end of the road as their is a stand-alone change tracker that can be used in the background when generating query results for No-Tracking entities so that each instance is transpire only once. The AsNoTrackingWithIdentityResolution method can be applied to a No-Tracking entity to make it have an Identity Resolution.

In the below code the Department entity is a No-Tracking entity with Identity Resolution.

var dept = await context.Department.AsNoTrackingWithIdentityResolution();This change tracker does not track the changes made to the entity, and after an entity is returned it goes out of scope and is garbage collected.

Custom Projections

If the query return a non-entity then EF Core will not track it. In the below example, only the Name and AddedOn fields are returned from the Department entity so in this case Entity Framework Core will not track the Department entity.

var dept = context.Department

.Select( b => new { Name = b.Name, AddedOn = b.Created }); In the below example Department entity is returned along with the count of the departments. Since resultset contains an entity (which is Department Entity) therefore the Department entity will be tracked.

var dept = context.Department

.Select(b => new { Department = b, Count = b.Count() });Optimizing techniques for Client and Server Evaluation

All Queries undergo Evaluation process where EF Core breaks them into 2 parts:

- Client Evaluation – Part which it can itself evaluate.

- Server Evaluation – Part which it sends to the database provider to evaluate.

These 2 evaluation processes will convert the Query in LINQ form to a corresponding SQL query which can be executed on the database. For example – LINQ query var dept = context.Department; is converted to a SQL query – Select * from Department.

Supported & Unsupported Client Evaluation

The below example is for a Supported Client Evaluation by EF Core. The method StandardizeUrl converts urls to https://. The database provider has no knowlege of how to deal with this method therefore EF Core will evaluate this method on the Client. The rest of the query is evaluated on the Server.

var blogs = await context.Blogs

.OrderByDescending(blog => blog.Rating)

.Select(

blog => new { Id = blog.BlogId, Url = StandardizeUrl(blog.Url) })

.ToListAsync();

public static string StandardizeUrl(string url)

{

url = url.ToLower();

if (!url.StartsWith("https://"))

{

url = string.Concat("https://", url);

}

return url;

}

Note that EF Core will make the entity trackable if the Client Evaluation is involved for it. Here, the Blog entity is passed to the client method StandardizeURL so EF Core will track the Blog instance.

In situations where client evaluation causes poor performance, EF Core is smart enough to block them and give runtime error. In the below query the StandardizeUrl method is now used in a where filter. This filter can’t be applied in the database, so all the data needs to be pulled into memory to apply the filter on the client. This causes poor performance.

Entity Framework Core blocks such client evaluation and throws a runtime exception.

var blogs = await context.Blogs.Where(blog => StandardizeUrl(blog.Url).Contains("dotnet")).ToListAsync();If we are sure that our Query will not result in poor performance then we can explicitly force EF core to perform it. We can use methods like AsEnumerable or ToList (AsAsyncEnumerable or ToListAsync for async) to force EF Core for client evaluation.

Using AsEnumerable would stream the results and using ToList would cause buffering by creating a list, which could take additional memory. In the below example we are using AsAsyncEnumerable to force the client evaluation.

var blogs = context.Blogs

.AsAsyncEnumerable()

.Where(blog => StandardizeUrl(blog.Url).Contains("dotnet"))

.ToListAsync();Optimizing techniques for Single and Split Queries

Entity Framework Core loads related entities by the use of SQL Joins. This is known as Single Query operation. Take an example of a Company having multiple Departments and Employees working in these departmentes. The Department entity has a related Employee entity and is given below.

public class Department

{

public int Id { get; set; }

public string Name { get; set; }

public ICollection<Employee> Employee { get; set; }

}

public class Employee

{

public int Id { get; set; }

public string Name { get; set; }

public string Designation { get; set; }

public int DepartmentId { get; set; }

public Department Department { get; set; } = null!;

}

When EF Core encounters the query to read Department and related Employee entities, see below.

var dept = await context.Department

.Include(b => b.Employee)

.Include(b => b.Work)

.ToListAsync();Then it will apply a SQL Left Join to execute a single query for it. This SQL query is shown below.

SELECT [d].[Id], [d].[Name], [e].[Id], [e].[DepartmentId], [e].[Designation], [e].[Name]

FROM [Department] AS [d]

LEFT JOIN [Employee] AS [e] ON [d].[Id] = [e].[DepartmentId]

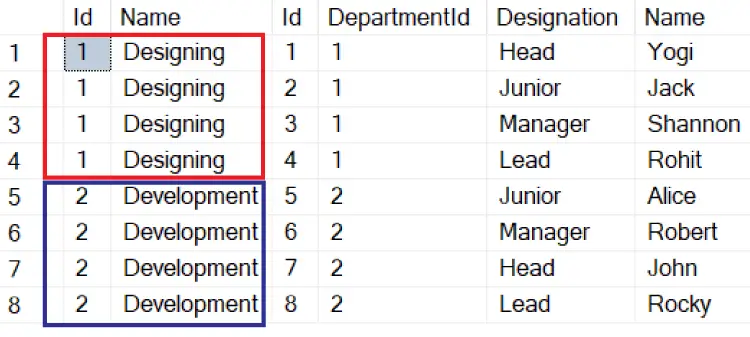

ORDER BY [d].[Id]This query will return all columns of Department and Employee tables. The columns of Department table (left side of Left Join) will be duplicated for each Employee table (right side of Left Join), which the department has.

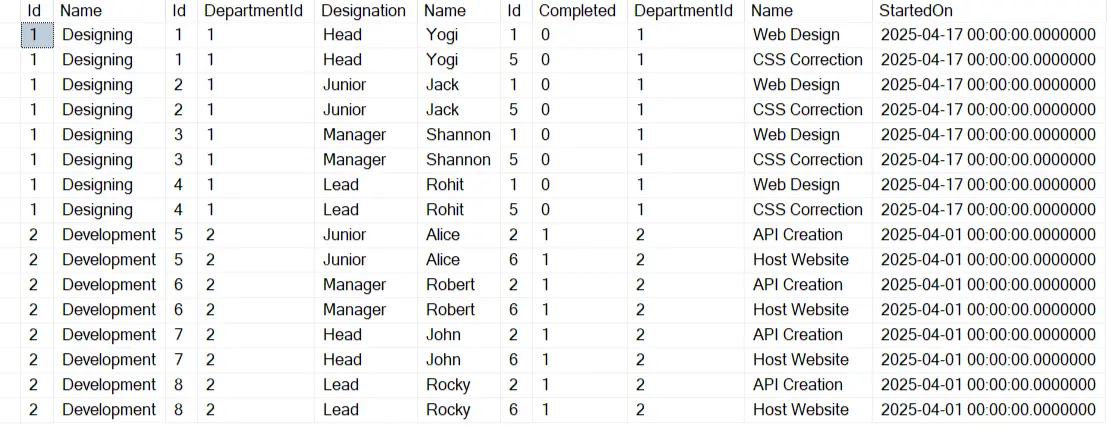

Check the below image which shows these duplication in red and blue blocks.

The problem can arise if the Department table has big columns then these columns will be duplicated. These will be send back to and fro the client to server multiple times during the course of different EF Core operations and will significantly increase network traffic and reduce the performance.

To optimize our codes we should omit these big columns in situations (by including only the columns which we need) where we don’t need them. This can be done through the LINQ Select method as shown below.

var dept = await context.Department

.Include(b => b.Employee)

.Select(b => new

{

b.Id,

b.Name,

b.Employee

})

.ToListAsync();

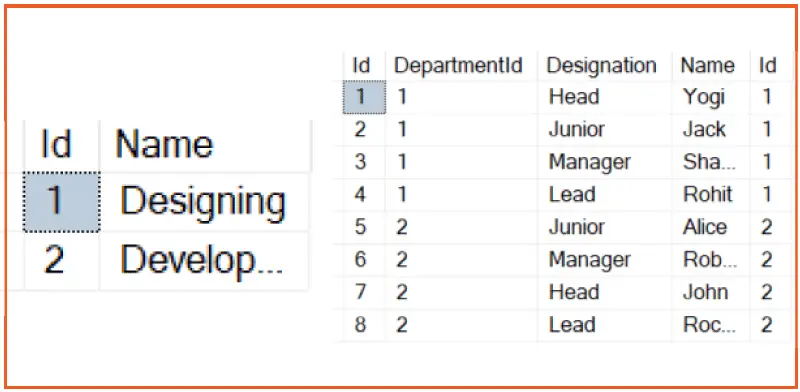

Split Query

Entity Framework Core has a way to deal with big and heavy columns by the use of Split query. EF Core splits the Single query into multiple smaller queries adding an additional SQL query for each included collection navigation. This removes the need for a LEF JOIN, which is causing duplicate records, between the tables.

We can implemented the above query by the use of AsSplitQuery() method so that this time EF Core will apply the Split query method.

The 2 SQL Queries generated in this case are given below.

SELECT [d].[Id], [d].[Name]

FROM [Department] AS [d]

ORDER BY [d].[Id]

SELECT [e].[Id], [e].[DepartmentId], [e].[Designation], [e].[Name], [d].[Id]

FROM [Department] AS [d]

INNER JOIN [Employee] AS [e] ON [d].[Id] = [e].[DepartmentId]

ORDER BY [d].[Id]Their result is shown by the image below:

The first SQL query retrieves all the Departments. The second query, in turn, retrieves their Employees. Notice the INNER JOIN has come into play which only returns those Department records that have a matching Employee. This is not the case for LEFT JOIN (used earlier) which gives all the records of the Departments (table on the left side of the LEFT Join) regardless of employees matching to it.

EF Core can combine the results of these queries into a collection of Department objects with Employees.

The resultset does not contain data duplicates. That in turn means that the amout of data transfers between the server and the app is lower than in the case of single querying.

Cartesian Explosion

Cartesian Explosion occurs when the rumber of recordsets which the database returns increases exponentially. It occurs when there are 2 or more Navigation property for an entity.

In the below example the Department entity has 2 Navigation properties – Employee and Work. Note that the navigation properties are at the same level.

public class Department

{

public int Id { get; set; }

public string Name { get; set; }

public ICollection<Employee> Employee { get; set; }

public ICollection<Work> Work { get; set; }

}

public class Employee

{

public int Id { get; set; }

public string Name { get; set; }

public string Designation { get; set; }

public int DepartmentId { get; set; }

public Department Department { get; set; } = null!;

}

public class Work

{

public int Id { get; set; }

public string Name { get; set; }

public bool Completed { get; set; }

public DateTime StartedOn { get; set; }

public int DepartmentId { get; set; }

public Department Department { get; set; } = null!;

}

When we read all the Departments with their related Employee and Work, which is shown by the below EF Core code.

var dept = await context.Department

.Include(b => b.Employee)

.Include(b => b.Work)

.ToListAsync();The SQL Query created by EF Core is:

SELECT [d].[Id], [d].[Name], [e].[Id], [e].[DepartmentId], [e].[Designation], [e].[Name], [w].[Id], [w].[Completed], [w].[DepartmentId], [w].[Name], [w].[StartedOn]

FROM [Department] AS [d]

LEFT JOIN [Employee] AS [e] ON [d].[Id] = [e].[DepartmentId]

LEFT JOIN [Work] AS [w] ON [d].[Id] = [w].[DepartmentId]

ORDER BY [d].[Id], [e].[Id]Here, a new LEFT JOIN is applied between the Work and Department tables.This means that if a given Department has 10 Employees and 10 Works, then the database returns 10*10 = 100 rows for that single Department. This phenomenon is called “Cartesian Explosion”. This causes huge amounts of data to get transferred and will be a major performance issue in database apps.

The below image shows the cartesian explosion caused by this query.

We can solve this problem by converting it to a split query by the use of AsSplitQuery() method.

var dept = await context.Department

.Include(b => b.Employee)

.Include(b => b.Work)

.AsSplitQuery()

.ToListAsync();

Now EF Core will convert it to 3 SQL queries. One returning all the Department records, second returning the Inner Join of Department and Employee records and the third returning the Inner Join of Department and Work records.

These SQL Queries are shown below.

SELECT [d].[Id], [d].[Name]

FROM [Department] AS [d]

ORDER BY [d].[Id]

SELECT [e].[Id], [e].[DepartmentId], [e].[Designation], [e].[Name], [d].[Id]

FROM [Department] AS [d]

INNER JOIN [Employee] AS [e] ON [d].[Id] = [e].[DepartmentId]

ORDER BY [d].[Id]

SELECT [w].[Id], [w].[Completed], [w].[DepartmentId], [w].[Name], [w].[StartedOn], [d].[Id]

FROM [Department] AS [d]

INNER JOIN [Work] AS [w] ON [d].[Id] = [w].[DepartmentId]

ORDER BY [d].[Id]

Note that the cartesian explosion does not occur when the two JOINs aren’t at the same level. See below example.

var dept = await context.Department

.Include(b => b.Employee)

.ThenInclude (b => b.Work)

.ToListAsync();Optimize Database calls and reduce size of Resultset

Whenever we access an entity through database context then Entity Framework Core calls the database to fetch the result set. We can make use of List type to store the result set and then extact the data from it, instead of making calls to the database again and again. See the below code where EF Core will be making database call 2 times.

var empall = context.Employee;

var empmatt = context.Employee.Where(e => e.Name == "Matt").FirstOrDefault(); We can reduce the calling to the database to just a single time by storing the result in a list type object and then subsiquently fetching a record from there. See the below code where we have done this thing.

var empall = context.Employee.ToList();

var empmatt = empall.Where(e => e.Name == "Matt").FirstOrDefault(); When we call an entity we are provided with all it’s fields. We should only pull back those fields that we need so that the unnecessary heaviness of the result set is reduced. For example in the below query we are only pulling the Name field for the entity.

var empmall = context.Employee.Select(b => b.Name); Similary we are only needing the name and designation of an employee which is done through the below linq code.

var empmatt = context.Employee.Where(e => e.Name == "Matt").Select(b => new {b.Name, b.Designation}).FirstOrDefault(); During reading of records, rather than fetching all at once, we should fetch them based on page by page manner. This should be done when we implement pagination feature. LINQ Skip and Take operators are used to implement this thing. Skip tells to bipass a given number of records from the start and Take tells to fetch a given number of records from there.

See the below code which are providing us the records for the page numbers 1, 2 and 3. Page size being set to 10 records per page.

var emp_page_One = context.Employee.Skip(0).Take(10); // gets page 1 records

var emp_page_Two = context.Employee.Skip(20).Take(10); // gets page 2 records

var emp_page_Three = context.Employee.Skip(30).Take(10); // gets page 3 records You can certainly read more about this concept of pagination in our article Create Number Paging with Custom Tag Helper in ASP.NET Core.

Performance Diagnosis

There are certain ways by which we can perform diagnosis of our Entity Framework Core codes and detect the cause of problems that are making our app slow. These are through –

- EF Core Logging

- Benchmarking

EF Core Logging

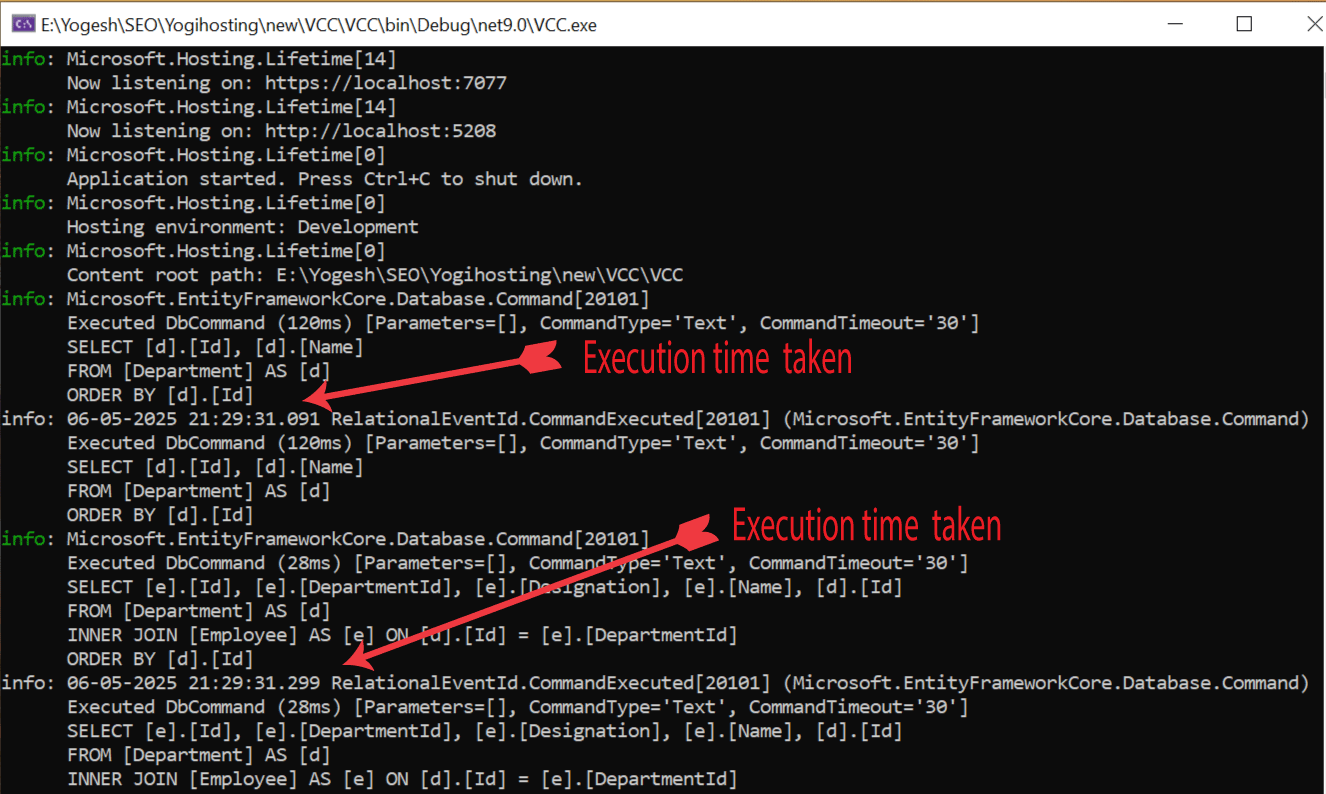

In the end of the day the Entity Framework Core will be executing SQL commands against the database. Seeing the logs can help in finding out which commands are taking more time for execution. EF Core Logging can be enabled in the program class during the time of registration of the DB Context. See the below code where we added the LogTo() method for enabling logging for EF Core.

builder.Services.AddDbContext<AppDbContext>(options => options.UseSqlServer(builder.Configuration.GetConnectionString("DefaultConnection")).LogTo(Console.WriteLine, LogLevel.Information));With this we will now see log messages for each command execution along with the time taken. Check the below screenshot.

Obviously there will be lots of SQL queries executing and finding the logs of each one can be a gruesome task. Therfore EF Core is provided by query tags feature, which allows to inject a small comment to identity the SQL query. The TagWith method is used to insert this comments on the queries. See below code.

var dept = await context.Department.TagWith("First query")

.Include(b => b.Employee).TagWith("Include related Employee")

.Include(b => b.Work).TagWith("Very slow execution, so check")

.AsSplitQuery()

.ToListAsync();The generated logs will now contain the comments to identity the queries easily. The logs for the above query is shown below.

info: 06-05-2025 21:56:12.383 RelationalEventId.CommandExecuted[20101] (Microsoft.EntityFrameworkCore.Database.Command)

Executed DbCommand (9ms) [Parameters=[], CommandType='Text', CommandTimeout='30']

-- First query

-- Include related Employee

-- Very slow execution, so check

SELECT [w].[Id], [w].[Completed], [w].[DepartmentId], [w].[Name], [w].[StartedOn], [d].[Id]

FROM [Department] AS [d]

INNER JOIN [Work] AS [w] ON [d].[Id] = [w].[DepartmentId]

ORDER BY [d].[Id]Benchmarking

The benchmarking library BenchmarkDotNet can be used to calculate performace of EF Core codes to identity areas for improvements. BenchmarkDotNet automatically runs each method for a sufficient number of iterations, and it finds out how long it takes to execute and how much memory is used.

In the below 2 method we are using benchmarking library to calculate performace of database reading of an entity in normal and with no tracking manner. Check the [Benchmark] attribute applied to the methods.

[Benchmark]

public async Task<IActionResult> ReadDatabase()

{

var dept = await context.Department

.Include(b => b.Employee)

.Include(b => b.Work)

.ToListAsync();

return View();

}

[Benchmark]

public async Task<IActionResult> ReadDatabaseNotracking()

{

var dept = await context.Department

.Include(b => b.Employee)

.Include(b => b.Work)

.AsNoTracking()

.ToListAsync();

return View();

}

Performance Topics

Let’s look at some of the ways by which we can improve the performace of our codes.

Query caching and parameterization

Entity Framework Core is going to create SQL queries from the LINQ queries it receives. To make this process faster, it caches LINQ query structure so that it can reuse the same structure for similar queries. This ensures faster executing of similar queries.

Below there are 2 LINQ queries which are almost same except the last filter part where 2 different names are used.

var dept1 = await context.Department.FirstOrDefaultAsync(p => p.Name == "Designing");

var dept2 = await context.Department.FirstOrDefaultAsync(p => p.Name == "Development");Here 2 different SQL queries are generated. Both queries are almost same.

SELECT TOP(1) [b].[Id], [b].[Name]

FROM [Department] AS [b]

WHERE [b].[Name] = N'Designing'

SELECT TOP(1) [b].[Id], [b].[Name]

FROM [Department] AS [b]

WHERE [b].[Name] = N'Development'What we can do here is to do a small modification and introduce a parameter which will make EF Core to use the same structure and speed the performance.

var name = "Designing";

var dept1 = await context.Department.FirstOrDefaultAsync(p => p.Name == Name);

name = "Development";

var dept2 = await context.Department.FirstOrDefaultAsync(p => p.Name == Name);Now only a single SQL query is generated and this will give a good speed boost.

SELECT TOP(1) [b].[Id], [b].[Name]

FROM [Department] AS [b]

WHERE [b].[Name] = @__postTitle_0Compiled models

When you have large models with large number of relationships then you can use complied models. To generate complied models use the below command. The –output-dir and –namespace options specify the directory and namespace into which the compiled model will be generated.

dotnet ef dbcontext optimize --output-dir MyCompiledModels --namespace MyCompiledModelsThen inside the OnConfiguring method we tell EF Core to use this complied model.

protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)

=> optionsBuilder

.UseModel(MyCompiledModels.ContextModel.Instance);ExecuteUpdate and ExecuteDelete

Batching together multiple statements to perform an update can be very useful since it removes the roundtrip. Example – see the below code where the salary of every employee is increased by $ 2000. If the company has 1000 employees then EF Core will perform 1000 roundtrips to the database to update the salary in the database for each of the employees.

foreach (var e in context.Employee)

{

e.Salary += 2000;

}

context.SaveChanges();We can avoid this roundtrip by using ExecuteUpdate method shown below.

await context.Employee.ExecuteUpdate(s => s.SetProperty(e => e.Salary, e => e.Salary + 2000));This will generate the update statement which only executes once and so will remove all roundtrips to the database.

UPDATE [Employees] SET [Salary] = [Salary] + 1000;The ExecuteDelete does a similar thing to delete records without the roundtrips. Check the below example which will have roundtrips to the database.

var books = context.Books.Where(x => x.AuthorName.Contains("Jack Sparrow"));

context.RemoveRange(books);

context.SaveChanges();The corresponding ExecuteDelete method code is given below.

var affectedRows = context.Books.Where(x => x.AuthorName.Contains("Jack Sparrow")).ExecuteDelete();In this tutorial we covered large number of Optimizing techniques for Entity Framework Core. These you can use to make your app lighweight and faster. Do share your thoughts on this topic.

Welcome to YogiHosting - A Programming Tutorial Website. It is used by millions of people around the world to learn and explore about ASP.NET Core, Blazor, jQuery, JavaScript, Docker, Kubernetes and other topics.

Welcome to YogiHosting - A Programming Tutorial Website. It is used by millions of people around the world to learn and explore about ASP.NET Core, Blazor, jQuery, JavaScript, Docker, Kubernetes and other topics.